The Buyer’s Guide to AI Customer Research Platforms

A complete guide on how to evaluate, compare, and choose the right AI research platform for your team.

Customer research has long forced teams into an uncomfortable choice: move fast with shallow surveys, or go deep with expensive, time-consuming interviews. AI has introduced a third option.

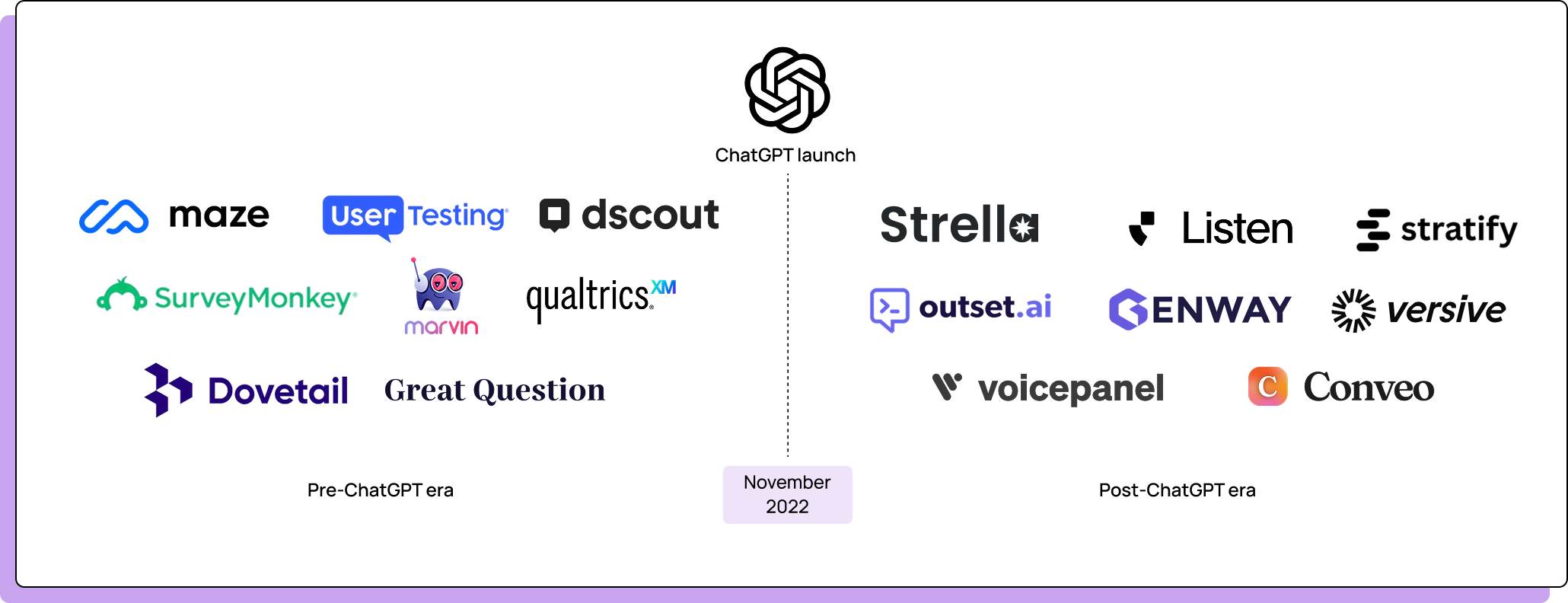

Since late 2022, a new generation of research platforms has emerged — tools built from the ground up to use AI not as a feature, but as a foundation. These platforms promise qualitative depth at quantitative scale.

The challenge is that not all research platforms can deliver on this promise. Some platforms bolt AI onto legacy architectures, hoping to modernize without rebuilding. Others are designed from scratch around what AI makes possible. This distinction shapes the quality of research you can do, the speed at which you can do it, and the insights you will get.

Buyer’s Guide Key Takeaways

The First Question: AI-native or Legacy?

Before comparing features, pricing, or integrations, answer one question: Was the platform built before or after ChatGPT’s release in November 2022?

The date matters because it signals architectural intent. Platforms built after 2022 could design around modern AI capabilities from day one. Platforms built before had to retrofit.

What “AI-native” Means

An AI-native platform treats AI as its foundation, not an enhancement.

Legacy platforms, by contrast, were designed before modern LLM capabilities existed. They carry years of technical architecture and an established UX paradigm. When they add AI, they are retrofitting modern capabilities onto infrastructure built for a different purpose.

This distinction shapes every decision a platform makes—from how interviews are conducted to how insights are surfaced.

Why Legacy Platforms Struggle to Pivot

If a platform was not built with AI at its core, catching up is harder than it appears.

Technical debt: Legacy platforms have years of infrastructure built around traditional research workflows. Retrofitting AI means working around existing constraints rather than designing for new possibilities.

Existing commitments: These companies have customers, workflows, and business models built on the established approach. A rapid pivot risks disrupting their existing base.

Incremental thinking: When adding AI to something that already exists, teams naturally think in terms of enhancement rather than reinvention. They ask “how can AI make this 10% better?” instead of “what would we build from scratch?”

The Implication

Choosing between AI-native and legacy is a philosophy decision as much as a technology decision.

It determines whether you are investing in the future of research or funding a platform trying to catch up from its past.

Diagnosing Your Research Problems

Before diving into evaluation frameworks, it helps to reflect on the main types of problems that teams relying on research typically face.

Data Freshness: The Ongoing Gap

Your team has research, but it is months or years old. Every reference comes with an asterisk: “This was from 18 months ago, so take it with a grain of salt.” Decisions get made on stale information because fresh research takes too long.

Root cause: Research velocity is too slow. By the time studies are completed, insights are already aging.

Lack of Data: The Hidden Gap

Your team makes decisions without customer input — not because they do not want research, but because there is none. The HiPPO (highest-paid person’s opinion) wins by default. Decisions are business-centric rather than customer-centric.

Root cause: The barrier to conducting research is too high. It is too expensive, too slow, or requires skills your team lacks.

Data is Too Expensive: Reserved for Big Bets

Your team does research, but only for major initiatives. Product launches get customer input. Quarterly planning gets customer input. But the dozens of smaller decisions made every week happen without research because the cost cannot be justified.

Root cause: Research is treated as a special occasion rather than a regular practice. The cost structure only works for high-stakes decisions.

Lack of Qual Data: Surface-Level Only

Your team has plenty of data—analytics, NPS scores, survey results. You know what customers are doing. But you rarely understand why. The data addresses surface questions but does not inform deeper decision-making.

Root cause: Quantitative methods dominate because they are cheaper and faster. Qualitative research is too expensive to run regularly.

Which Problem Resonates Most?

Most teams suffer from at least one of these; many suffer from several. Diagnosing which category of problem your team faces will help you evaluate platforms through the right lens.

Whichever problem resonates most, an AI-native customer research platform is well-positioned to address all four.

What to Optimize For: Speed, Cost, and Depth of Insight

If you are committed to evaluating AI-native platforms, it helps to have criteria for comparison. Three metrics matter most:

- Speed to insight: How quickly can you go from research question to actionable finding?

- Cost to insight: What is the total investment (money, time, effort) required to get there?

- Depth of insight: Are you getting insights that previously would have been impossible to get without human moderation?

These metrics force you to evaluate the impact a tool will have across the entire research lifecycle, instead of focusing on any single feature in isolation. A platform might have impressive AI guide creation, but if its analysis tools are clunky or recruitment takes weeks, at least one of these metrics will suffer. The best platform is the one that optimizes across all three criteria.

Questions to Ask

When evaluating any platform, ask yourself:

- What is the average timeline from study kickoff to stakeholder presentation?

- What is the cost per study, including my team’s time?

- How much more high-quality research (if any) will I be able to run per quarter?

- Where does this platform add the most value in my current workflow?

- Where are the gaps? What will I still have to do manually?

These questions, and the conversations they prompt, will prove more useful than any feature comparison chart.
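The cost-per-study and studies-per-quarter questions above can be roughed out with simple arithmetic. The sketch below is only an illustration: the `StudyEstimate` fields, the 65-working-day quarter, and the assumption that studies run one after another are all hypothetical choices, not figures from any platform. Substitute your own numbers.

```python
from dataclasses import dataclass


@dataclass
class StudyEstimate:
    """Hypothetical inputs for one study; every figure is a placeholder."""
    platform_fee: float     # platform cost attributed to one study
    incentives: float       # total participant incentives
    team_hours: float       # hours your team spends on the study
    hourly_rate: float      # loaded hourly cost of your team's time
    days_to_readout: float  # working days from kickoff to stakeholder presentation


def cost_per_study(s: StudyEstimate) -> float:
    """Cost to insight: direct spend plus the cost of the team's time."""
    return s.platform_fee + s.incentives + s.team_hours * s.hourly_rate


def studies_per_quarter(s: StudyEstimate, budget: float, working_days: int = 65) -> int:
    """Studies that fit in one quarter, limited by both budget and time.

    Assumes studies run sequentially, which is a simplification.
    """
    by_budget = int(budget // cost_per_study(s))
    by_time = int(working_days // s.days_to_readout)
    return min(by_budget, by_time)


# Placeholder comparison: a current workflow versus a candidate platform.
current = StudyEstimate(platform_fee=0, incentives=1500, team_hours=40,
                        hourly_rate=75, days_to_readout=30)
candidate = StudyEstimate(platform_fee=2000, incentives=1000, team_hours=8,
                          hourly_rate=75, days_to_readout=5)

for name, s in [("current", current), ("candidate", candidate)]:
    print(name, cost_per_study(s), studies_per_quarter(s, budget=20000))
```

Separating the budget limit from the time limit shows which constraint actually caps your research volume, which in turn points to whether speed or cost deserves more weight in your evaluation.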

The Five Stages of a Research Study

A complete AI-native platform should add value at each stage of the research lifecycle:

If a platform excels at one stage but fails at another, your total speed, cost, and depth of insight can suffer. Your platform should optimize each stage of the process.

Stage 1: Research Plan Creation

Leverage AI-assisted guide building to transform high-level objectives into structured, non-biased discussion questions and branching logic in minutes.

Stage 2: Recruitment

Automate the sourcing and screening of high-quality participants through integrated panels or private user groups to eliminate the traditional multi-day scheduling bottleneck.

Stage 3: Moderation

Deploy dynamic AI moderators that conduct natural, real-time conversations to probe deeper into participant "whys" without the limitations of static surveys.

Stage 4: Analysis

Shift from manual transcript review to AI-powered synthesis that instantly surfaces cross-session themes, pain points, and supporting evidence at scale.

Stage 5: Presentation

Translate raw data into stakeholder impact by generating automated executive summaries and video highlight reels that drive faster organizational decision-making.

Deep Dive

The downloadable PDF version of this guide includes a detailed "What Good Looks Like" breakdown for each stage to help you audit your current tools.

Evaluating the AI-native Landscape

There are a handful of key players to evaluate in the AI-native research platform landscape. For reference, a few were included in the comparative image at the beginning of this guide.

What They Have in Common

All of these AI-native platforms:

- Were built after the ChatGPT inflection point

- Use LLMs as a core component of their moderation approach

- Aim to deliver qualitative depth at scale

- Promise faster, more cost-effective research than traditional qualitative methods

Where They Differ

These platforms tend to differ in:

- Moderation approach: Some use start-and-stop formats; others prioritize continuous conversations

- Study types supported: The types of studies you can run, as well as the stimuli supported, vary widely

- Recruitment flexibility: Options for using integrated panels versus bringing your own users

The Moderation Bottleneck

For some research stages, teams can use general-purpose AI tools like ChatGPT, Claude, or Gemini as viable alternatives. For the moderation stage, however, there are few solutions capable of taking on the responsibilities of a competent moderator.

Because it’s the most underserved stage in the research process, small differences in moderation quality can have an outsized impact on the speed, cost, and depth of insights. As such, moderation is currently the highest-leverage stage of the research lifecycle to focus on when evaluating AI research platforms.

Two Approaches to AI Moderation

Two distinct approaches to AI moderation have emerged across AI-native platforms:

- AI-moderated surveys: AI adds intelligence to a survey-like experience

- AI-moderated conversations: AI conducts something closer to an interview

Both improve on unmoderated research methods. Both help teams do better research faster. But to be clear, they are not the same.

The Key Difference for Participants

The most important difference between the two approaches is how they feel to participants.

AI-moderated surveys present a question, then wait for the participant to press one button to start recording and another to submit the answer before moving on to the next question.

AI-moderated conversations flow continuously. Participants respond in real time without discrete start/stop actions between each exchange.

The Key Difference for Research Outcomes

The richness of insights, and as a result the impact of research, depends on the feedback you’re able to gather from participants. The closer the participant experience feels to a natural interaction, the more likely participants are to provide the kind of deep, think-aloud responses that make qualitative research valuable.

AI-moderated surveys can fall short because the start-and-stop rhythm resembles how people interact with surveys, not how they interact with other people. This shift into “survey mode” prevents the organic flow that produces qualitative depth. The pauses also give participants time to craft responses designed to please, or to look up answers elsewhere, which increases fraud risk.

On the other hand, the continuous nature of AI-moderated conversations encourages more natural responses while making it harder to game the interview. When research success relies on understanding what customers really think, this difference in participant experience can prove crucial – especially as that advantage compounds across the scale of research AI-native platforms enable.

What to Look For in AI Moderation

When evaluating AI moderation, prioritize:

How to Test This Yourself

- Request a demo where you can participate as a respondent

- Pay attention to how the interaction feels

- Notice whether follow-up questions feel relevant and probing

- If possible, ask to see sample transcripts from real studies

The difference between platforms becomes easier to perceive from the receiving end. When possible, test with real participants to see how they interact with the AI moderation.

What Changes When You Adopt an AI-native Platform

When you bring on a platform that addresses the most prevalent research problems, three things shift in how teams conduct research:

Quality: The Average Study Gets Better

When AI handles moderation well, the average quality of your research increases—not just flagship studies, all studies. You get richer data from every participant, which means better insights from every project. This directly impacts your average depth of insight: you start getting answers that previously only in-depth interviews could surface.

Research quality today is often uneven: the major study gets resources and attention; the quick pulse check gets rushed. With good AI moderation, even quick studies produce qualitative depth.

Quantity: More Research Gets Done

When the barrier to execution drops, teams do more research. Studies that previously would not have happened, because their cost was difficult to justify, become feasible.

The question shifts from “can we afford to do research?” to “which questions do we want to answer first?”

Frequency: Research Becomes Continuous

The biggest shift is from research-as-event to research-as-practice. Instead of quarterly studies that inform quarterly decisions, you gather customer input on an ongoing basis.

This keeps your team connected to current customer reality, not stale snapshots.

The Result

When quality, quantity, and frequency all improve, you get a compounding effect.

More high-quality research gets done more frequently—leading to better customer-backed decisions across the organization.

Evaluation Checklist

We’ve compiled a 23-point evaluation checklist for your team, available in the full guide below.